8. Hierarchical scheduling

Here we shortly present two kinds of hierarchical scheduling currently available into Cheddar: ARINC 653 scheduling and classical aperiodic task servers.

8.1 ARINC 653 scheduling

In this section, first we show how to model an ARINC 653 system, and then, we present what we can expect from Cheddar in term of schedulability anlysis for such a system.

8.1.1 How to model an ARINC 653 two-levels scheduling

ARINC 653 is a avionic standard characterized by the concept of partitioning. Partitionning ensures time and space isolation in order to improve safety. The ultimate goal is to guaranty that if a failure occurs on one partition, it will not impact the other partitions running on the same hardware. In order to enforce isolation, each partition executes in a fixed time frame and has a specific address space to store code and data. For multi-core systems, the ARINC653 standard required that the operating system support the following possibilities:

- Tasks within a partition are assigned to different cores. Then they are scheduled concurrently on the concerned cores.

- Partitions are assigned to cores. Then, the partitions are scheduled concurrently on the concerned cores In ARINC653, each task in a multi-core system has a task core affinity attribute that links it to a specific core. Then during scheduling, the task is limited to the assigned core. Moreover, each core in the system has a specific set of tasks that will be scheduled on it.

A partition contains at least one task and a two levels of scheduling is applied:

- Partitions scheduling level: for each core, the partitions of the tasks assigned to the concerned core, are based on a cyclic and designed off-line scheduling. Each partition may be cyclically released for a given duration. Partitions have to execute during a cyclic interval called a major time frame (MAF). A MAF is then composed of MIF and partition windows. The set of partition windows give the sequence of partition execution. Each partition window is defined by a duration.

- Tasks scheduling level: each partition have to schedule its tasks. Inside a partition, tasks are usually scheduled according to a fixed priority policy.

With Cheddar, such 2 level scheduling is modeled as follow:

Partitions are modelled by Cheddar address spaces. In Cheddar, a scheduler is optionnal in each address space. To model an ARINC 653, each address space must host a scheduler.* Cheddar supports ARINC 653 scheduling for single-core and multi-core systems i.e. each processor can have one or several cores. For this purpose, monocore, identical multi-cores, uniform multi-cores, unrelated multi-core processors are proposed in Cheddar. * The scheduler assigned to the Cheddar core entity models the partition scheduling. When designing an ARINC 653 system, the core must host the Hierarchical_Offline_Protocol value. With Hierarchical_Offline_Protocol, the address spaces are scheduled off-line, as ARINC653 partition. The ARINC653 MAF of each core, i.e. the sequence of partitions, is store in an XML file. This XML file specifies for the associated core, at which order the Cheddar address spaces which models ARINC partitions, are run.

Cheddar provides others address space scheduler such as Hierarchical\_Cyclic\_Protocol, Hierarchical\_Round\_Robin\_Protocol or Hierarchical\_Fixed\_Priority\_Protocol. They are not ARINC 653 compliant as they do are based on a offline partition scheduling, but may be used to build such partition scheduling. Hierarchical\_Cyclic\_Protocol and Hierarchical\_Round\_Robin\_Protocol run partition/address space in a cyclic way while Hierarchical\_Fixed\_Priority\_Protocol run partition/address space according to thier fixed priority. ,

- Cheddar tasks are both assigned to a core and to an address space, which actually specifies in which partition the task must be run and with which partition/address space offline scheduling the tasks belongs. To be compliant with ARINC 653, each Cheddar address space modeling an ARINC 653 partition must have at least one task.

8.1.2 Examples of an ARINC 653 scheduling

In this section we present two examples of ARINC 653 scheduling with Cheddar. The first example modeles a single core system and the second one modeles a multi-core system.

-

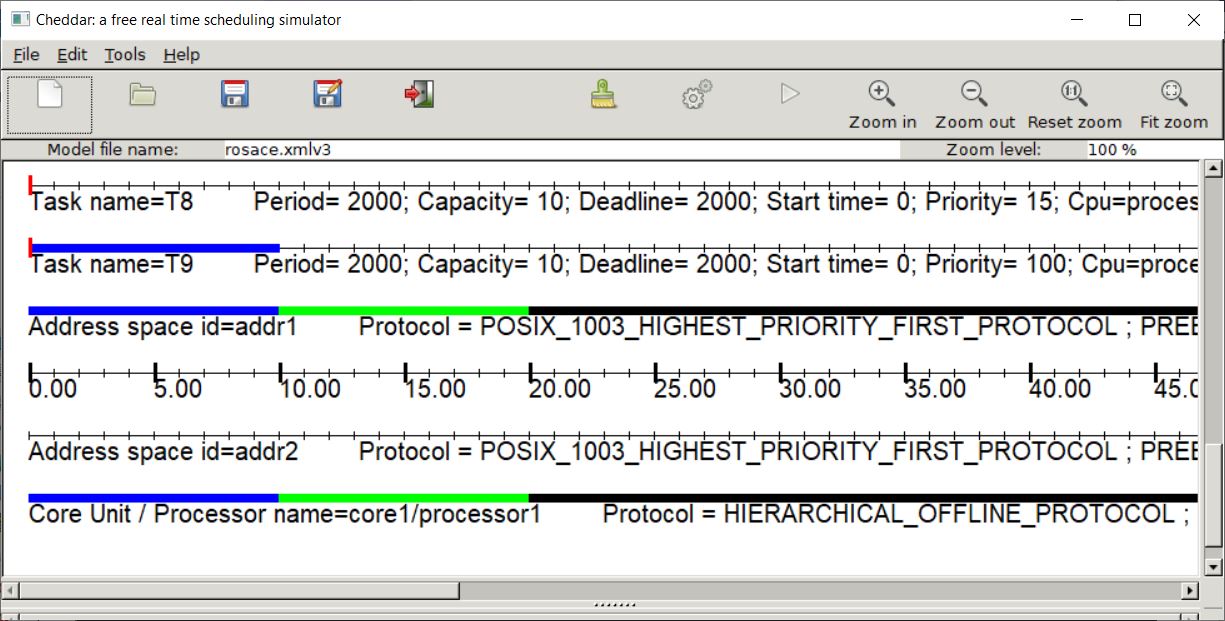

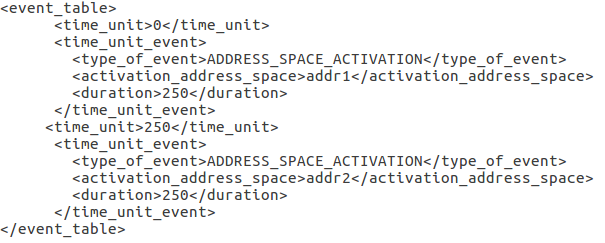

The first example is stored in the file rosace.xmlv3. This model contains 2 partitions modeled by 2 Cheddar address spaces. The partitions MAF is described below:

Partition addr1 is run from time 0 to 250 and then, partition addr2 is run during 250 time unit. Such scheduling is cyclically repeated. This offline partition scheduling is stored in an XML. The one shown above is stored in the file partition_scheduling_rosace.xml.

The rosace.xmlv3 file contains also 15 tasks assigned to the two partitions/address space. The scheduling of this model is shown in the screenshoot bellow. In this screenshot, we see partition addr1 wihch is run fist. We also see T9 run in the beginning of the addr1 time slot. No task from addr2 before addr1 is not completed.

-

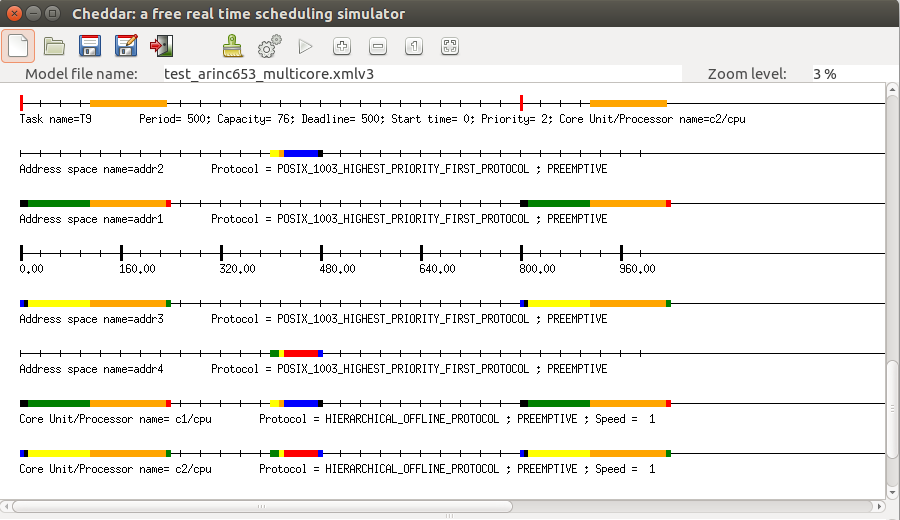

The second example is stored in the file arinc653_multicore.xmlv3. This model contains 4 partitions modeled by 4 Cheddar address spaces which tasks are splited into 2 cores. The partitions MAF of the first core is described below:

Partition addr1 is run from time 0 to 250 and then, partition addr2 is run during 250 time unit. Such scheduling is cyclically repeated. This offline partition scheduling is stored in an XML. The one shown above is stored in the file core1_scheduling.xml. The same scheduling is applied in the second core that host tasks of addr3 and addr4 as shown in the following figure:

The arinc653_multicore.xmlv3 file contains also 18 tasks assigned to the 4 partitions/address spaces. The scheduling of this model is shown in the screenshoot bellow. In this screenshot, we see partition addr1 (resp. addr2) and addr1 (resp. addr4) running in parallel.

8.2 Aperiodic server hierarchical scheduling

Cheddar implements several classical aperiodic servers. This paragraph illustrates this with the polling server.

Aperiodic servers is a mean to adapt scheduling of aperiodic tasks with fixed priority scheduling and Rate Monotonic priority assignment. Several approaches have been proposed to handle aperiodic tasks in this context.

The polling server is one of these approaches. Basically, a polling server is a periodic task defined by a period, a priority and a capacity. When aperiodic tasks are released in the system, they are stored in a queue. At each periodic release time of the polling server, it runs aperiodic tasks which are waiting for the processor in the queue. The polling server runs aperiodic tasks for the duration of its capacity. If the aperiodic task queue is empty at the polling server's release times, the server does nothing. It waits for its next periodic release time. This mechanism allows the processor to run aperiodic tasks as soon as possible but without delaying periodic tasks that are critical. Notice that a polling server has a fixed priority: if this priority is high, it may reduce latency for aperiodic tasks. Whatever this priority and the number of arriving aperiodic task, a polling server can be taken into account when schedulability of the system is verified. Then, as the polling server never exceeds its capacity, schedulability is never jeopardized.

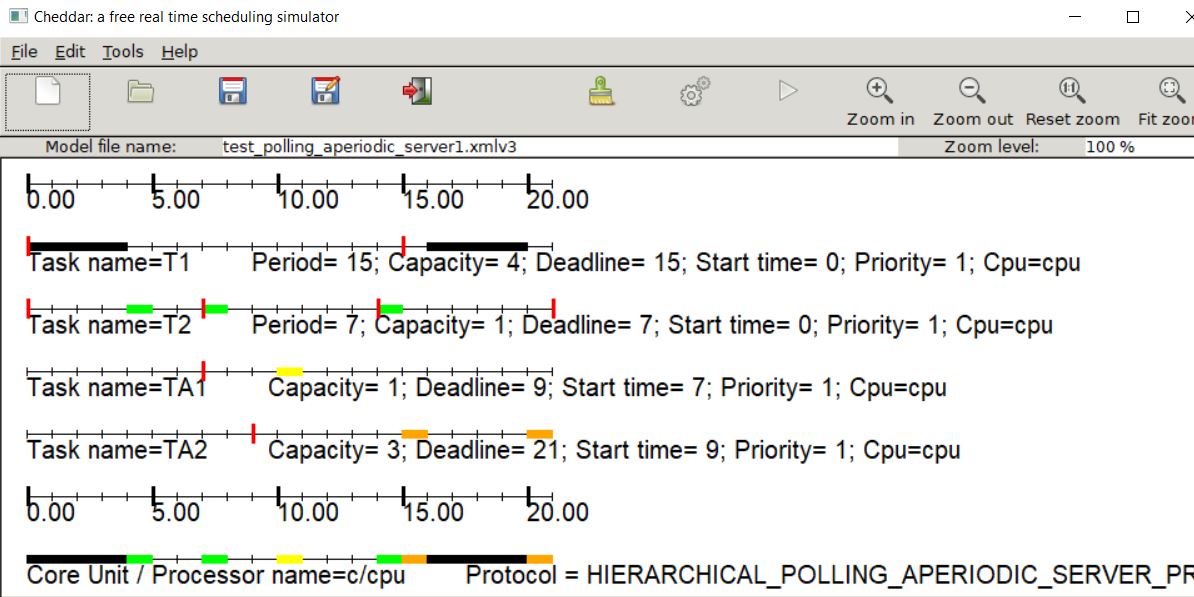

To play with a polling server with Cheddar, the main element to configure properly is a core. For such scheduling algorithme, the core must be used in a monoprocessor. Here is an example of valid parameters for a polling server:

The core c has a high priority value (value of 100), which means that aperiodic tasks will be run quickly. Low scheduling latency of aperiodic tasks is also brought because of the value of the polling server, which is about 5 here. Finally, we give 20 percent of the processor to aperiodic tasks as the capacity of the server is about 1. Fianlly, notice the scheduler type specified here: Hierarchical_Polling_Aperiodic_Server_Protocol.

The screenshoot above shows how the previous core can be used to perform a scheduling simulation. We have two periodic tasks (T1 and T2) and two aperiodic tasks (TA1 and TA2) respectively release at time 7 and 9, with a capacity of respectively 1 and 3. Notice that before time 7, the aperiodic server does nothing, but after time 10, i.e. after its next release with an aperiodic task is ready to run, the polling server used its 1 unit capacity to spread the execution of the aperiodic tasks present in its queue. Aperiodic tasks are then run at 10, 15 and 20 in this figure, while never joeoardizing T1 and T2 deadlines.